I got this idea while working on The Selenium Project, where I built bots to automate daily tasks. I wondered: what if we could automate a simple profile search and then filter out all the duplicate profiles? In this post, I'll explain my approach.

The Selenium Project

When I started experimenting with Python and Selenium, I wondered whether we could automate the way we browse the internet: write some code to log in, follow, like, and click buttons, and let it run on its own.

Scraping LinkedIn

I chose LinkedIn because I thought it had less spam content.

When I first started automating standard LinkedIn interactions like logging in, following, and viewing profiles, I realized that collecting profiles was easy, but judging their authenticity was hard and could be biased. There were also practical obstacles:

- LinkedIn detects bot activity

- We cannot view everyone’s profile

- Actions/searches per day are limited
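The daily limit can at least be respected on the script side by capping the number of searches per run and pausing between them. Below is a minimal sketch of that idea; SearchThrottle is a hypothetical helper that is not part of the scripts in this post, and its cap and delay values are placeholders rather than LinkedIn's actual limits. Spacing requests out does not make automation undetectable.

```python
import random
import time


class SearchThrottle:
    """Hypothetical helper: cap searches per run and pause a random time between them.

    max_searches, min_delay and max_delay are placeholder values, not LinkedIn's limits.
    """

    def __init__(self, max_searches=20, min_delay=5.0, max_delay=15.0,
                 sleep=time.sleep, rng=None):
        self.max_searches = max_searches
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.sleep = sleep               # injectable, so tests can record delays instead of waiting
        self.rng = rng or random.Random()
        self.used = 0

    def acquire(self):
        """Return True if another search is allowed (pausing first if needed), otherwise False."""
        if self.used >= self.max_searches:
            return False
        if self.used > 0:
            # No pause before the first search; a jittered pause before each later one
            self.sleep(self.rng.uniform(self.min_delay, self.max_delay))
        self.used += 1
        return True
```

For example, create throttle = SearchThrottle(max_searches=3) and, in the loop over names, call throttle.acquire() before each search, stopping as soon as it returns False.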

Detecting Fake Profiles

To identify a fake profile, we would need to collect a lot of information and track a user's activity: their posts, likes, engagement rate, profile picture, and more.

What next?

We can't go after every random profile we find and judge its authenticity. However, after some manual searching and research, I found some easy targets: celebrities and CEOs, whose names are unique and often misused.

Here’s what I did

- Pick a famous profile

- [Automation starts]

- Search their name

- Collect the result data

- Keep only the profiles that match the exact name

- I managed to gather 50-75 profiles per search

- Not all, but the majority (90%) were fake or spam

- I chose CEOs because there were many imposters!
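The matching step is plain string work: turn the name into its lowercase, hyphenated form and keep the links that contain it. A minimal sketch, assuming the collected links are already in a Python list; name_to_slug and matching_links are hypothetical helper names, and the URLs are made-up examples. Like the scripts in this post, it assumes a matching profile URL contains the hyphenated name, so profiles whose custom URL drops the hyphen will be missed.

```python
def name_to_slug(name):
    """Lowercase the name and join its words with hyphens, e.g. "Jeff Bezos" -> "jeff-bezos"."""
    return "-".join(name.lower().split())


def matching_links(hrefs, name):
    """Keep only the links that contain the hyphenated form of `name`.

    Entries that are None (anchors without an href attribute) are skipped.
    """
    slug = name_to_slug(name)
    return [href for href in hrefs if href and slug in href]


links = [
    "https://www.linkedin.com/in/jeff-bezos-1a2b3c/",  # made-up example URLs
    None,
    "https://www.linkedin.com/company/example/",
]
print(matching_links(links, "Jeff Bezos"))  # ['https://www.linkedin.com/in/jeff-bezos-1a2b3c/']
```

Using split() and join() instead of replace(" ", "-") also collapses repeated or surrounding spaces in the name.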

Let’s get to the code now!

Requirements

- Python 3+

- Selenium [pip install selenium]

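- Chrome Web Driver (download the version that matches your Chrome browser)

We can also use Firefox or Edge. Remember that the Chrome Web Driver version must match the version of your installed Chrome browser. (Selenium 4.6 and later include Selenium Manager, which can download a matching driver automatically.)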

Code to Collect Fake Profiles on LinkedIn

I used Python, Selenium, and Chrome Web Driver.

Start Session

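from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
import time

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service("C:/WebDrivers/chromedriver.exe"))
driver.get('https://www.linkedin.com/uas/login')

Login

# Fill in the credentials (replace the placeholders with your own)
text_area = driver.find_element(By.ID, 'username')
text_area.send_keys("your_email_here")

text_area = driver.find_element(By.ID, 'password')
text_area.send_keys("your_password_here")

# The id value must be quoted inside the XPath expression
submit_button = driver.find_element(By.XPATH, "//*[@id='app__container']/main/div/form/div[3]/button")
submit_button.click()

If the XPath of the submit button doesn't work, you can easily find a new one: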

- We use the element's id and XPath to enter data and click buttons

- Right-click on the required button or field

- Select the Inspect option

- In the HTML code, look for the button's id attribute

In CSS selector notation, an id is written with a leading '#', so it looks something like #button-login.

Sometimes the id doesn't work. In that case, right-click the element in the Elements panel and choose Copy > Copy XPath to get its XPath instead.
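The '#' prefix belongs to CSS selector syntax; in XPath, the same id is matched with an @id test, and the value has to be quoted, which is why the login XPath needs quotes around app__container. Here is a small sketch that builds both forms from one id; id_selectors is a hypothetical helper, and it assumes the id contains no quotes or other characters that would need escaping.

```python
def id_selectors(element_id):
    """Hypothetical helper: build a CSS selector and an XPath for one element id.

    Assumes the id contains no quotes or other characters that would need escaping.
    """
    css = "#" + element_id                      # CSS syntax: '#' marks an id
    xpath = "//*[@id='{}']".format(element_id)  # XPath syntax: the id value is quoted
    return css, xpath


css, xpath = id_selectors("button-login")
print(css)    # #button-login
print(xpath)  # //*[@id='button-login']
```

Either string can then be passed to Selenium 4, for example driver.find_element(By.CSS_SELECTOR, css) or driver.find_element(By.XPATH, xpath).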

1. Profile Link Collector

List

s = ["Amitabh Bachchan", "Jeff Bezos", "Mark Zuckerberg"]

Search and crawl

This searches for similar user names and prints the exact matches.

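from selenium.webdriver.common.by import By

def search(m):
    driver.get("https://www.linkedin.com/feed/")
    # "ember41" is generated by LinkedIn's front end and may change between sessions
    search_box = driver.find_element(By.XPATH, "//*[@id=\"ember41\"]/input")
    search_box.send_keys(m, "\n")
    time.sleep(5)  # give the results page time to load
    print("Searching", m)

    # Collect every link on the results page
    res = []
    for a in driver.find_elements(By.XPATH, './/a'):
        res.append(a.get_attribute("href"))

    # Match against the lowercase, hyphenated form of the name, e.g. "jeff-bezos"
    n = m.lower().replace(" ", "-")
    print(n)

    for link in res:
        # Anchors without an href return None, so skip those
        if link and n in link:
            print(link)

Iterator & Result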

for z in s:
    search(z)

2. Brute Force Collection

This code also collects profiles, but it focuses on a single name and gathers more results, around 50-70 links per search, by visiting the following search result pages as well and thus collecting more profile data per search query.

m = "Jeff Bezos"
final_list = []

Super Crawler and Link Collection

Like the first script, this one collects all the links on a page and then filters them for the exact name we're looking for; what makes it better is that it repeats this over several result pages. A typical search may offer 3-4 result pages, though some names return more.
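To reach the later result pages, the script appends "&page=" plus a number to the current results URL. That works as long as the URL already has a query string and no page parameter. A slightly more defensive sketch, using only urllib.parse from the standard library, sets the parameter instead of appending it; page_url and page_urls are hypothetical helpers, and this assumes the search stays in the URL's query string, as the script itself relies on.

```python
from urllib.parse import parse_qsl, quote, urlencode, urlsplit, urlunsplit


def page_url(search_url, page):
    """Return search_url with its `page` query parameter set to `page`.

    An existing `page` parameter is replaced rather than duplicated.
    """
    parts = urlsplit(search_url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != "page"]
    query.append(("page", str(page)))
    # quote_via=quote keeps spaces as %20 instead of '+'
    return urlunsplit(parts._replace(query=urlencode(query, quote_via=quote)))


def page_urls(search_url, first=2, last=6):
    """URLs for result pages first..last inclusive; page 1 is the one already loaded."""
    return [page_url(search_url, p) for p in range(first, last + 1)]
```

Inside the search function, this would replace the string concatenation with something like: for url in page_urls(driver.current_url): driver.get(url).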

from selenium.webdriver.common.by import By

def search(m):
    num = 1
    driver.get("https://www.linkedin.com/feed/")
    # "ember41" is generated by LinkedIn's front end and may change between sessions
    search_box = driver.find_element(By.XPATH, "//*[@id=\"ember41\"]/input")
    search_box.send_keys(m, "\n")
    time.sleep(5)
    print("Searching", m)

    # Match against the lowercase, hyphenated form of the name
    n = m.lower().replace(" ", "-")
    print(n)

    # Page 1: collect every link and keep the ones containing the name
    res = [a.get_attribute("href") for a in driver.find_elements(By.XPATH, './/a')]
    for link in res:
        if link and n in link:  # skip anchors without an href
            print("Link ", num, " : ", link)
            num = num + 1
            final_list.append(link)

    # Pages 2-6: reuse the results URL with a page parameter
    current = driver.current_url
    for page in range(2, 7):
        driver.get(current + "&page=" + str(page))
        time.sleep(2)
        res = [a.get_attribute("href") for a in driver.find_elements(By.XPATH, './/a')]
        for link in res:
            if link and n in link:
                print("Link ", num, " : ", link)
                num = num + 1
                final_list.append(link)

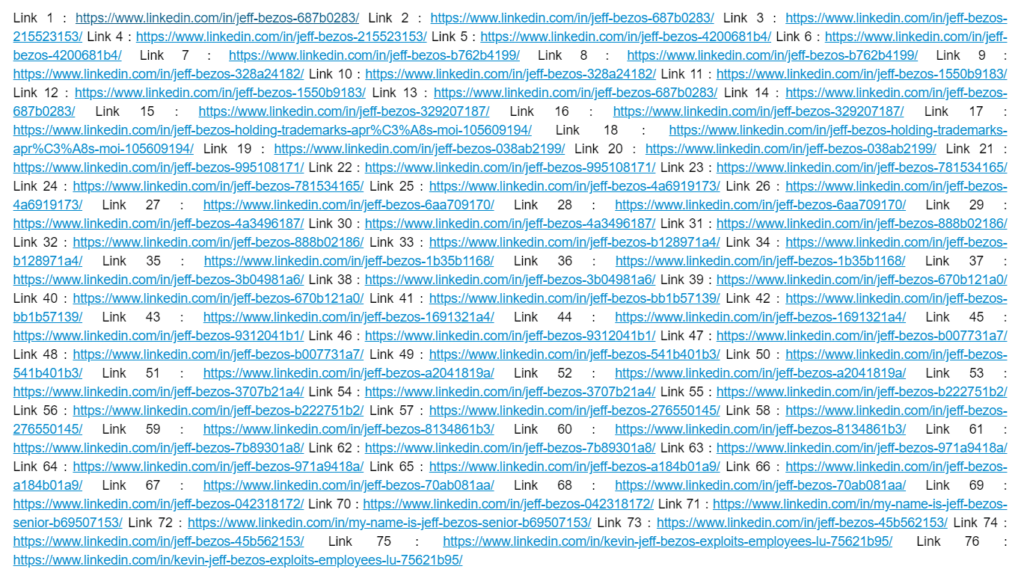

search(m)

The Result: Collects 70+ Profiles with an Exact Match

for x, link in enumerate(final_list):
    print(x, " : ", link)

Sample Result Data

Searching Jeff Bezos

Yes, we are using a unique name (Jeff Bezos) to search, and I believe the chances of a genuine user having the exact same name are very slim.
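Since the scripts keep every matching link, final_list can contain the same profile more than once, for example when a result card links to one profile from several places, or when two links differ only in their query string. Here is a minimal sketch for removing those repeats, assuming the URL path alone identifies a profile; canonical_profile_url and unique_profiles are hypothetical helpers, and the URLs are made-up examples.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_profile_url(href):
    """Drop the query string, fragment and trailing slash so variants of one link compare equal."""
    parts = urlsplit(href)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path.rstrip("/"), "", ""))


def unique_profiles(links):
    """Keep the first occurrence of each canonical profile URL, preserving order."""
    seen = set()
    unique = []
    for href in links:
        key = canonical_profile_url(href)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


links = [
    "https://www.linkedin.com/in/jeff-bezos-1/?ref=abc",  # made-up example URLs
    "https://www.linkedin.com/in/jeff-bezos-1/",
    "https://www.linkedin.com/in/jeff-bezos-2",
]
for x, link in enumerate(unique_profiles(links)):
    print(x, ":", link)
# 0 : https://www.linkedin.com/in/jeff-bezos-1
# 1 : https://www.linkedin.com/in/jeff-bezos-2
```

In the script, unique_profiles(final_list) would be applied before printing the results.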

Note: This is an experimental project. Data scraping and excessive searching might get your LinkedIn account banned, so use this for educational purposes only. Cheers, and have fun!

This post was published as part of my project called The Selenium Project, where I mostly automate the boring stuff using Python and Selenium. If you like it, check out the GitHub repository and drop a star.
